Enabling Jumbo frames in VMware environments

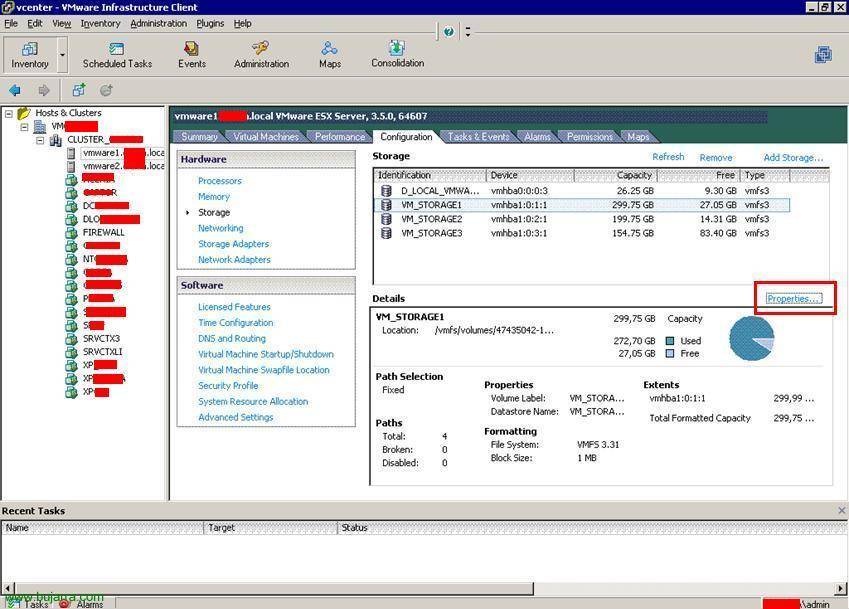

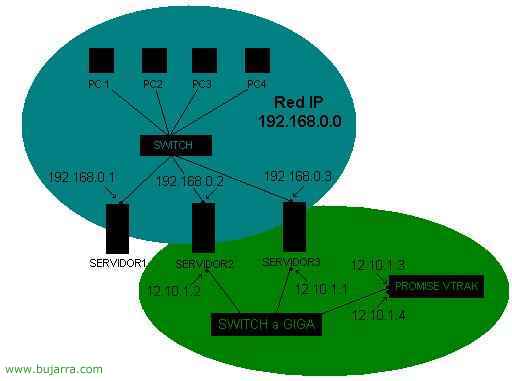

One setting that should always be configured when working with gigabit networks is the MTU (Maximum Transmission Unit). The MTU is the size, in bytes, of the largest packet that can be sent over a link without being fragmented. By default, LAN networks use an MTU of 1500 bytes. On VMware and on every device that makes up the gigabit Ethernet network (typically the iSCSI storage network), this value must be raised to 9000 bytes. Frames of this size are known as jumbo frames.

Jumbo frames must be enabled end to end: on the storage array, on the switch (some switches have it enabled by default), on the VMware ESX/ESXi hosts (both the vSwitch and the VMkernel port group), and at the NIC level on any equipment directly connected to that network. The goal is to take full advantage of the gigabit network by sending larger frames, so that the same data travels in fewer frames with less per-frame overhead.
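To show why larger frames help, and why every device on the path has to be changed, here is a small back-of-the-envelope sketch. It assumes IPv4 and TCP headers without options (40 bytes inside the MTU) and standard Ethernet framing on the wire (38 bytes outside the MTU). The hop names in the example path are hypothetical and stand in for the devices listed above. It is an illustration of the arithmetic, not a measurement of real iSCSI throughput.

```python
import math

# Assumptions: IPv4 + TCP headers without options (20 + 20 bytes inside the MTU),
# and standard Ethernet framing on the wire (14 header + 4 FCS + 8 preamble/SFD
# + 12 inter-frame gap = 38 bytes outside the MTU).
IP_TCP_HEADERS = 20 + 20
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12


def frames_needed(data_bytes: int, mtu: int) -> int:
    """Full-size frames needed to carry data_bytes of TCP payload."""
    return math.ceil(data_bytes / (mtu - IP_TCP_HEADERS))


def wire_efficiency(mtu: int) -> float:
    """Share of the bytes on the wire that is payload, for one full-size frame."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETHERNET_OVERHEAD)


def path_mtu(hops: dict[str, int]) -> int:
    """The largest frame that can cross every hop is limited by the smallest MTU."""
    return min(hops.values())


if __name__ == "__main__":
    gib = 1024 ** 3
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {frames_needed(gib, mtu):,} frames per GiB, "
              f"{wire_efficiency(mtu):.1%} of wire bytes are payload")

    # Hypothetical iSCSI path in which the physical switch was left at the default MTU.
    hops = {"esxi-vmkernel": 9000, "vswitch": 9000, "switch": 1500, "storage-array": 9000}
    print("effective MTU:", path_mtu(hops))
    print("hops still below 9000:", [name for name, mtu in hops.items() if mtu < 9000])
```

Under these header assumptions, moving 1 GiB takes 735,440 frames at MTU 1500 versus 119,838 at MTU 9000, roughly six times fewer. The share of wire bytes that carry payload also rises from about 94.9% to about 99.1%. The path check shows why a single device left at 1500 bytes caps the whole path, even if the other devices are configured for 9000.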